Preventing insider attacks is getting more difficult

The attack surface has been evolving, making it increasingly difficult to detect and prevent insider attacks. The prevalence of bring-your-own-device (BYOD) policies, the proliferation of SaaS tools and applications, and the migration of data to the cloud have changed the nature of the corporate perimeter. The variety, breadth, and dispersed nature of access points make it harder for you to control the security environment and make it easier for attackers to hide their tracks.

These changes have cybersecurity experts and IT departments concerned that users accessing systems from outside the corporate perimeter increase the likelihood of data leakage.

It’s not only that there are more devices used to access the corporate network; it’s that so many of the phones and laptops are unsecured, making it harder for you to detect rogue devices within the forest of benign ones.

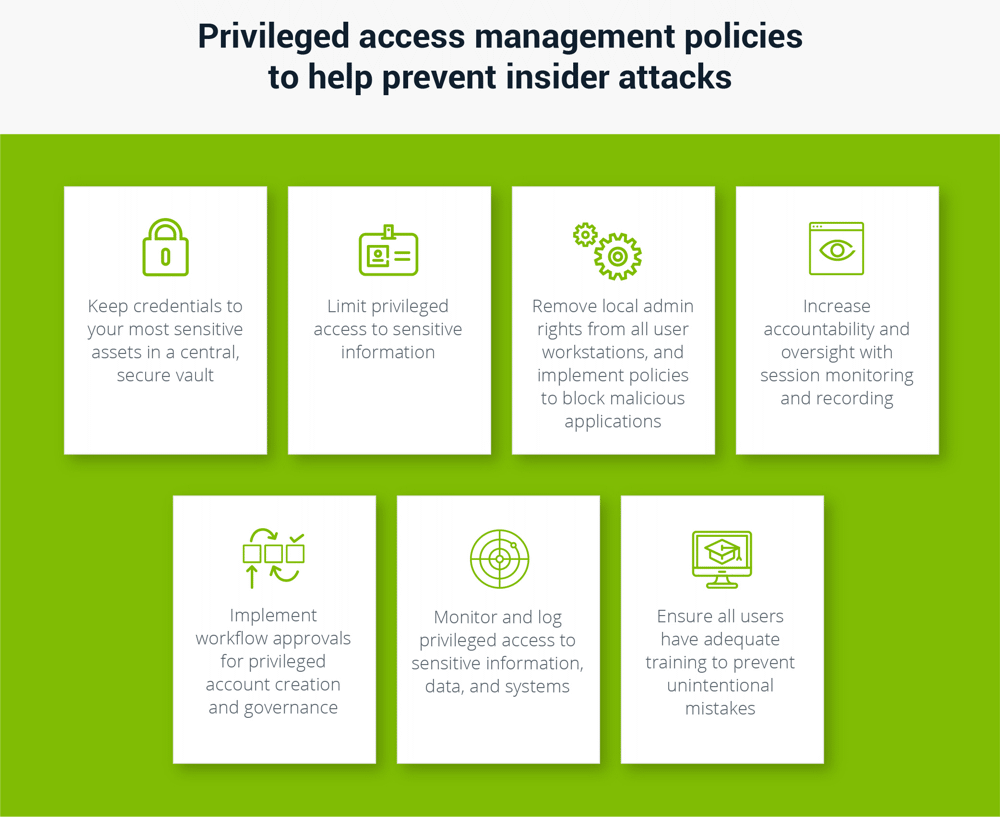

On average, nearly a quarter of all employees are privileged users. Privileged users have access to a wider array of sensitive systems and data than standard users. Some privileged users may have a legitimate need for that increased access. But not everyone does. When so many insiders have elevated privileges, it’s hard to differentiate between legitimate and aberrant behavior.

These days, during the COVID-19 pandemic, many more “insiders” are working outside the office. People working remotely expect and need the same access to systems that they have in the office. Yet IT teams have less visibility into and control over that activity, which increases the risk of insider threats.

When proper off-boarding protocols are forgotten, the gaps give former employees opportunities to exact revenge

In some organizations, employees are being let go. When this happens quickly, proper off-boarding protocols and processes may be forgotten. Privileged accounts may remain enabled, employees may retain company-issued laptops, and passwords may not be changed or credentials revoked as they should be. Gaps like these give former employees the opportunity to steal intellectual property (IP), plant malware, and exact revenge.
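To make those gaps concrete, here is a minimal sketch of an off-boarding audit. It assumes you can export account data into simple records; the `Account` fields and the `offboarding_gaps` function are hypothetical names chosen for illustration, not part of any real directory service or HR system. The checks mirror the three gaps described above: accounts left enabled, laptops not returned, and passwords left unchanged.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Account:
    # Hypothetical record; field names are assumptions, not a real schema.
    username: str
    enabled: bool
    privileged: bool
    laptop_returned: bool
    termination_date: Optional[date]  # None for current employees
    last_password_change: date


def offboarding_gaps(accounts):
    """Return {username: [gap descriptions]} for departed users only."""
    findings = {}
    for acct in accounts:
        if acct.termination_date is None:
            continue  # still employed, so out of scope for this audit
        gaps = []
        if acct.enabled:
            kind = "privileged account" if acct.privileged else "account"
            gaps.append(f"{kind} still enabled")
        if not acct.laptop_returned:
            gaps.append("company laptop not returned")
        if acct.last_password_change < acct.termination_date:
            gaps.append("password not changed since departure")
        if gaps:
            findings[acct.username] = gaps
    return findings


# Illustrative data only; these users and dates are made up.
accounts = [
    Account("alice", True, True, False, date(2020, 5, 1), date(2020, 1, 1)),
    Account("bob", False, False, True, date(2020, 5, 1), date(2020, 5, 1)),
    Account("carol", True, True, True, None, date(2019, 1, 1)),
]
for user, gaps in offboarding_gaps(accounts).items():
    print(user, gaps)
```

In this made-up data set, only the first record is flagged: the second was off-boarded cleanly and the third is a current employee. A real audit would pull these fields from your identity provider and asset inventory rather than a hard-coded list.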

As all of these risk factors increase, insiders (and the criminals who stalk them) have become more sophisticated in their use of technology and in their ability to cover their tracks and navigate corporate networks surreptitiously.

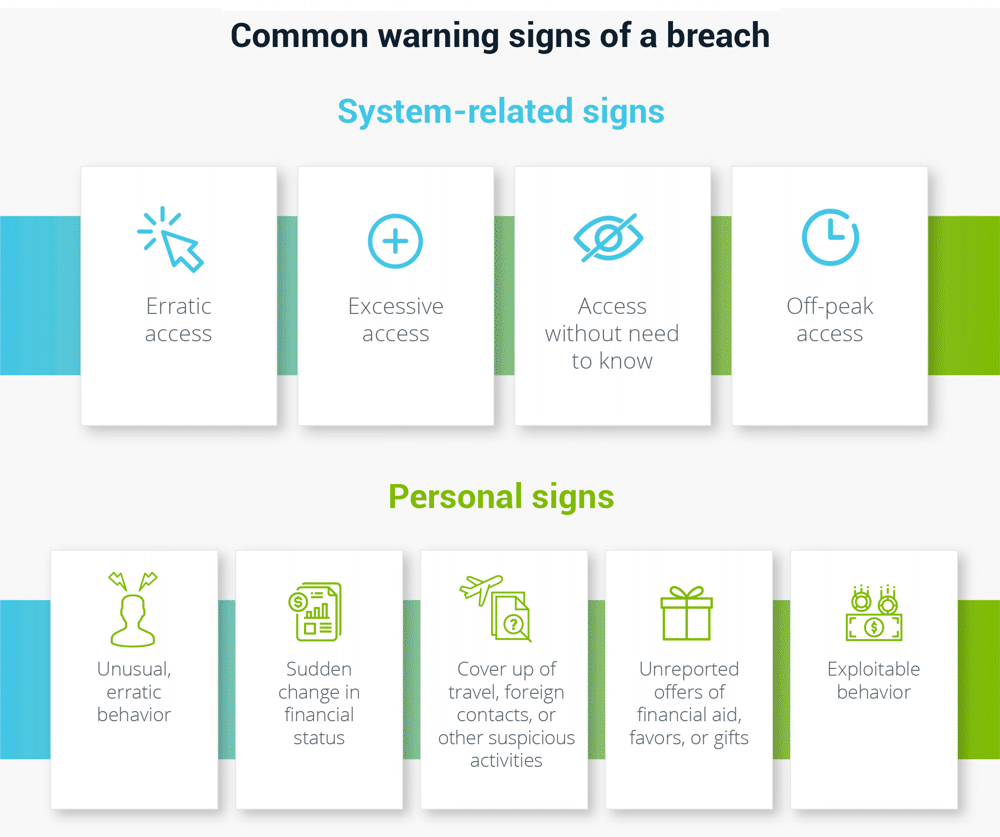

That said, it’s possible to detect insider threats before they cause damage. First, let’s explore some high-profile insider threats from the past few years. Then, I’ll cover how these types of breaches could have been discovered and possibly prevented.