PAM maturity model for DevOps

Security is a journey, not a destination. It’s a mindset, an adventure, and sometimes a tedious process. In DevOps, security needs to be baked in and implemented step by step, rather than applied as an afterthought.

If you’re a developer, operations engineer, or manager of either, then this probably applies to you. If Kubernetes, pipelines, CI/CD, Infrastructure as Code, GitHub Actions, machine identity, and secrets are a large part of your team discussions, then this definitely applies to you!

Delinea’s Privileged Access Management (PAM) Maturity Model maps out the PAM adoption journey. It’s easy to get overwhelmed when all the steps are jumbled together. By understanding the step-by-step process, we can move forward with confidence and achieve important PAM milestones. I’m going to outline some milestones specific to DevOps workflows.

The human users in your organization need privileged access to operate. So do the machines. And just like our human users, we want the machine identities to have just enough privilege to do their job and not share secrets with other users, machine or human. Unlike our human users though, it doesn’t make sense for machines to ask permission for privilege. In addition, in modern organizations there are orders of magnitude more machines than humans. This level of scale requires automation.

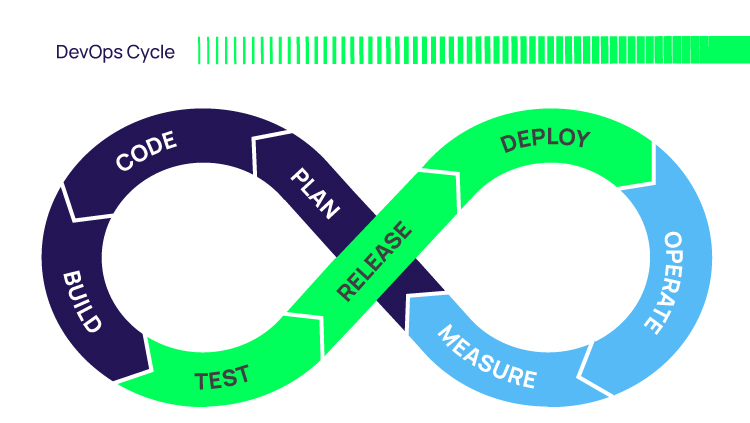

DevOps tools and workflows need privilege at almost every step. Cloning the repo, testing against a database, accessing external APIs, saving artifacts, sending updates - and we haven’t even gotten to production yet. In production, we have databases, caching layers, external APIs, microservices, replicated datacenters, logging, and auditing systems to connect.

So how do we keep these all secure without grinding to a halt? Let’s dive in:

Steps for effective DevOps security:

Phase 1: Identify secrets and store in vault. Access them using basic auth (user/pass, ClientID/secret)

In Phase 1 we’re finding secrets that need to be secured and identifying who has access. Look for them anywhere, but specifically in source code, resource files, environment variables, workflows, cloud configs, and k8s clusters. This will need tools and auditing to complete but can be built in phases. For source code, you can use a linting tool on each PR to find secrets, then move them to the vault. Once everything is vaulted, we can review all access and limit as much as possible. You may notice at this point we haven’t solved much. We just have secrets to access more secrets. It’s just another layer of abstraction! Have hope - we’re only beginning our journey!
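To make the "linting tool on each PR" idea concrete, here is a minimal sketch of a secret scanner. The three rules are illustrative assumptions rather than a complete rule set, and the names `SECRET_PATTERNS` and `scan_text` are made up for this example. A dedicated scanning tool will catch far more than this does.

```python
import re

# Illustrative rules only; real scanners ship far larger rule sets.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "hardcoded_assignment": re.compile(
        r"(?i)\b(password|passwd|secret|api_key|token)\b\s*[:=]\s*['\"][^'\"]{8,}['\"]"
    ),
}


def scan_text(text):
    """Return (line_number, rule_name) for every line that matches a rule."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for rule_name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_number, rule_name))
    return findings
```

Run something like this against the changed files in each PR and fail the check when `scan_text` returns any findings. Each finding is a candidate to move into the vault.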

Phase 2: Automate workflows using tools to integrate with your environment

In Phase 2 we consolidate and automate tools that require secrets. Rather than code using secrets directly, move that access to your GitHub Workflows, Kubernetes secrets, Jenkins, Azure DevOps, or other tools. This is good DevOps practice anyway and may be old news to your team. Split up vault information where possible, so not all the information to access secrets is in the same file. If the server can log in, but the workflow definition has the path to the secret, a malicious actor will need to breach more systems to access all the pieces to the puzzle.
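Here is a rough sketch of what "splitting up vault information" can look like. The login credential comes from the server's environment, while the path to the secret comes from the workflow definition. `FakeVault`, `load_secret`, the `VAULT_TOKEN` variable, and the `secret_path` key are all hypothetical names for illustration, not a real vault client.

```python
import hmac
import os


class FakeVault:
    """Hypothetical in-memory stand-in for a vault, for illustration only."""

    def __init__(self, valid_token, secrets_by_path):
        self._valid_token = valid_token
        self._secrets_by_path = secrets_by_path

    def read(self, token, path):
        # Constant-time comparison of the presented login token.
        if not hmac.compare_digest(token.encode(), self._valid_token.encode()):
            raise PermissionError("vault login rejected")
        return self._secrets_by_path[path]


def load_secret(vault, workflow_config, environ=os.environ):
    # Piece 1 lives on the server or runner: the credential used to log in.
    token = environ["VAULT_TOKEN"]
    # Piece 2 lives in the workflow definition: where the secret is stored.
    path = workflow_config["secret_path"]
    return vault.read(token, path)
```

Neither piece is useful on its own. Someone who reads the workflow file learns a path but cannot log in, and someone who steals the token still has to find out which path matters.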

Phase 3: Convert static secrets to dynamic secrets. Just-in-Time (JIT), short-lived creds

In Phase 3 we lock down anything static and replace it with single-use or short-lived credentials. This is the machine equivalent of rotating secrets periodically. The static credentials stay in the vault with very limited access. The vault then uses them to back a “virtual” secret that generates a new credential on every read. This works for cloud services as well as for databases not located in the cloud.
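The "new credential on every read" behavior can be sketched in a few lines. This is an in-memory illustration under stated assumptions: `DynamicSecret` is a made-up class, and where the comment says so, a real vault would use its static credential to create a temporary account in the target system.

```python
import secrets
import time


class DynamicSecret:
    """Mints a fresh, short-lived credential on every read (in-memory sketch)."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._expires_at = {}  # (username, password) -> expiry timestamp

    def read(self):
        # A real vault would use its tightly held static credential here to
        # create a temporary account in the target database or cloud service.
        username = "tmp-" + secrets.token_hex(4)
        password = secrets.token_urlsafe(24)
        self._expires_at[(username, password)] = self._clock() + self._ttl
        return username, password

    def is_valid(self, username, password):
        expires_at = self._expires_at.get((username, password))
        return expires_at is not None and self._clock() < expires_at
```

Every caller gets its own credential, so a leaked one can be traced to a single read and stops working once its TTL passes.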

Phase 4: Address “Secret Zero” by using Multi-Factor Authentication (MFA) for users and machines

In Phase 4 we address how clients access the vault. The goal is to get rid of static credentials for vault access. Use a certificate that is issued to a machine, redelivered periodically, and set to expire. Limit access by IP address. Use a CLI to get a session or single-use URL and pass it off to your tool, so even if the credentials get logged or taken, they are only valid for minutes or have already been used. An even better solution would be no secret at all, like using your cloud IAM role to connect with the vault.
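The "only valid for minutes or already used" property is simple to sketch. The `SingleUseTokens` class below is a hypothetical in-memory example, not how any particular vault implements it.

```python
import secrets
import time


class SingleUseTokens:
    """Issues tokens that can be redeemed once, and only before they expire."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._pending = {}  # token -> expiry timestamp

    def issue(self):
        token = secrets.token_urlsafe(32)
        self._pending[token] = self._clock() + self._ttl
        return token

    def redeem(self, token):
        # pop() removes the token, so a second redeem attempt always fails.
        expires_at = self._pending.pop(token, None)
        return expires_at is not None and self._clock() < expires_at
```

If a token ends up in a build log after the tool has redeemed it, it is already worthless. If it leaks before use, the short TTL limits how long anyone has to act on it.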

Phase 4 is where we focus on Multi-Factor Authentication (MFA) wherever we can. MFA for machines works differently than we humans are used to, but there are analogs. MFA can use things that you know, have, or are, to verify your identity. Machines have these characteristics, but the workflows look different. An EC2 instance has tools to help with identity, so let’s use them. An agent running on your machine is an authority that can retrieve a session and pass it along to an app. Private keys are unique IDs and can be expired and reissued.
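As a rough illustration of machine MFA, the sketch below only accepts a machine when several independent checks agree: where the request comes from, which certificate it presents, and whether that certificate is still fresh. `MachinePolicy`, `fingerprint`, and `verify_machine` are hypothetical names, and hashing raw certificate bytes stands in for real certificate validation.

```python
import hashlib
import hmac
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class MachinePolicy:
    allowed_network: str     # CIDR range the machine must connect from
    cert_fingerprint: str    # SHA-256 hex digest of the issued certificate
    cert_expires_at: float   # timestamp after which the cert is rejected


def fingerprint(cert_bytes):
    return hashlib.sha256(cert_bytes).hexdigest()


def verify_machine(policy, source_ip, cert_bytes, now):
    # Factor 1: the request comes from the expected network.
    in_network = ipaddress.ip_address(source_ip) in ipaddress.ip_network(
        policy.allowed_network
    )
    # Factor 2: the machine holds the certificate that was issued to it.
    cert_matches = hmac.compare_digest(
        fingerprint(cert_bytes), policy.cert_fingerprint
    )
    # The certificate must also be unexpired, which forces periodic reissue.
    cert_fresh = now < policy.cert_expires_at
    return in_network and cert_matches and cert_fresh
```

A stolen certificate used from the wrong network fails, and so does the right machine presenting an expired certificate.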

These phases are iterative in nature, just like the DevOps process. Move forward with what you know. It may be a single secret or app that goes through all the phases, or different teams that adopt faster than others.

The goal of all of this is to reduce your DevOps attack surface. It will increase your ability to pass audits, especially for compliance and cyber insurance. It will give your org clarity to move forward with what you do best and keep malicious actors out.
